Launching Kubernetes Cluster using Ansible on AWS

What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

Why do you need Kubernetes and what can it do?

Containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. For example, if a container goes down, another container needs to start. Wouldn’t it be easier if this behavior was handled by a system? That’s how Kubernetes comes to the rescue! Kubernetes provides you with a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, provides deployment patterns, and more. For example, Kubernetes can easily manage a canary deployment for your system.

Kubernetes provides you with:

- Service discovery and load balancing

- Storage orchestration

- Automated rollouts and rollbacks

- Automatic bin packing

- Self-healing

- Secret and configuration management
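Several of these features show up in a single Deployment manifest. The following is a minimal sketch, not something taken from this walkthrough: the name `web`, the `nginx:1.25` image, and the resource figures are illustrative assumptions.

```yaml
# Hypothetical Deployment; names, image and numbers are illustrative only.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3                 # self-healing: failed pods are replaced to keep 3 running
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate       # automated rollouts: pods are replaced gradually
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
          resources:
            requests:         # bin packing: the scheduler places pods using these requests
              cpu: 100m
              memory: 64Mi
```

Applying it with `kubectl apply -f` and later changing the image tag would trigger a rolling update, which `kubectl rollout undo` can revert.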

What is Ansible?

Ansible is a configuration management system written in Python using a declarative markup language to describe configurations. It is used to automate software configuration and deployment.

Ansible Architecture:

Ansible Playbooks:

Ordered lists of tasks, saved so you can run those tasks in that order repeatedly. Playbooks can include variables as well as tasks. Playbooks are written in YAML and are easy to read, write, share and understand.
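As an illustration of that structure, here is a minimal playbook sketch with one variable and two ordered tasks. The file name, the `webservers` group, and the `httpd` package are assumptions made for the example only.

```yaml
# site.yml (hypothetical file name)
- name: Install and start a web server
  hosts: webservers          # hypothetical inventory group
  become: yes
  vars:
    web_package: httpd       # variable consumed by the tasks below
  tasks:
    # Tasks run top to bottom, in this order, on every run.
    - name: Install the package
      package:
        name: "{{ web_package }}"
        state: present

    - name: Start the service
      service:
        name: "{{ web_package }}"
        state: started
```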

Inventory:

A list of managed nodes. An inventory file is also sometimes called a “hostfile”. Your inventory can specify information such as the IP address of each managed node. An inventory can also organize managed nodes into groups.
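A static inventory in YAML form might look like the sketch below. The group names, host aliases, and IP addresses (taken from a documentation-only range) are all hypothetical; the walkthrough itself relies on a dynamic inventory instead.

```yaml
# inventory.yml (hypothetical): two groups, three managed nodes
all:
  children:
    k8s_master:
      hosts:
        master:
          ansible_host: 203.0.113.10
    k8s_nodes:
      hosts:
        node1:
          ansible_host: 203.0.113.11
        node2:
          ansible_host: 203.0.113.12
```

A play can then target a whole group, for example `hosts: k8s_nodes`.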

Let’s Begin…

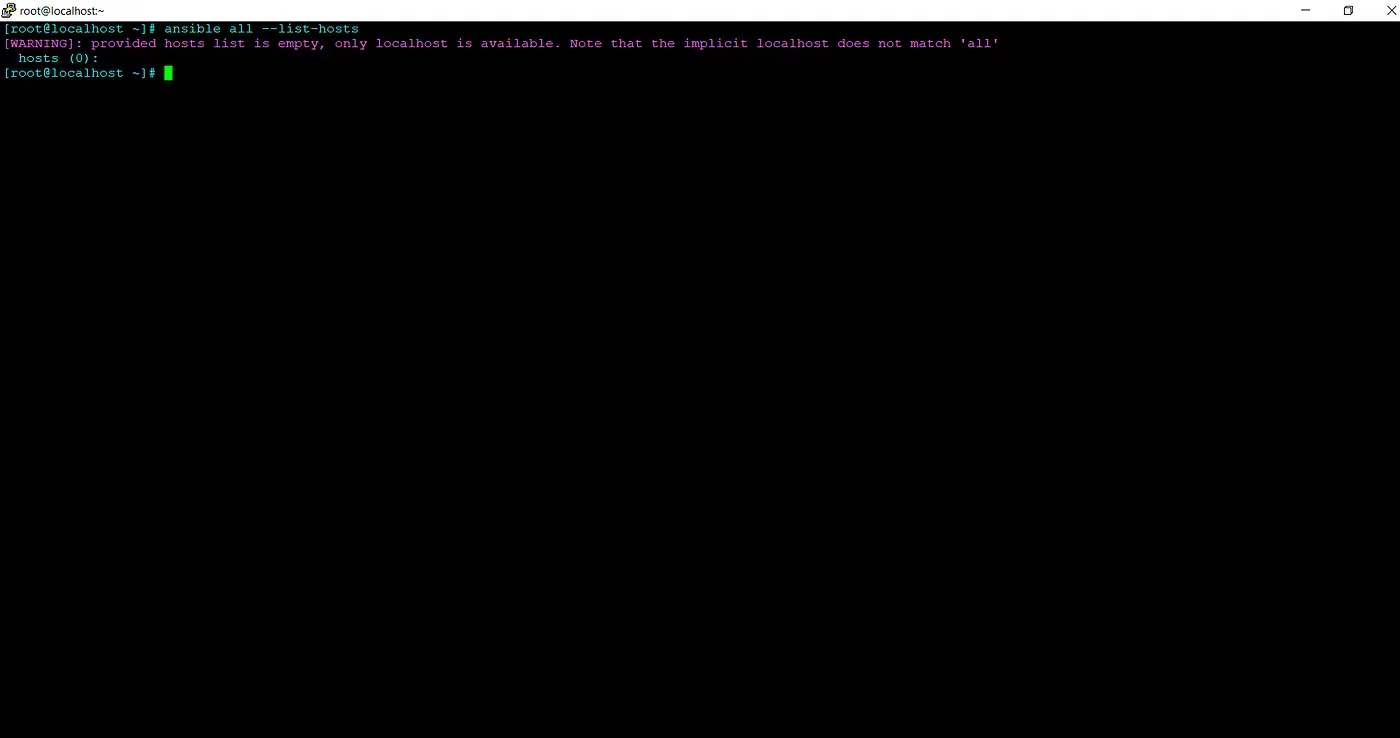

At this point, no hosts or instances are running.

Launching EC2 Instances using Ansible with dynamic inventory:

- Create the role:

    ansible-galaxy init ec2

- In the tasks file:

    - ec2:
        key_name: "{{ key_name }}"
        instance_type: "{{ instance_type }}"
        image: "{{ image_id }}"
        wait: yes
        count: "{{ count }}"
        # instance_tags:
        #   name: "sample_os"
        vpc_subnet_id: "{{ subnet_id }}"
        assign_public_ip: yes
        state: present
        region: "{{ region }}"
        group_id: "{{ sg_group_id }}"
        aws_access_key: "{{ aws_access_key }}"
        aws_secret_key: "{{ aws_secret_key }}"
        instance_tags:
          Name: "{{ item }}"
      loop: "{{ OS_name }}"

- In the vars file:

    aws_secret_key: "aws_secret_key"
    key_name: "testing"
    image_id: "ami-038f1ca1bd58a5790"
    count: 1
    subnet_id: "subnet-52bc140d"
    region: "us-east-1"
    sg_group_id: "sg-0639b0dd0a69545ea"
    instance_type: "t2.micro"
    OS_name:
      - "K8S_Master"
      - "K8S_Node1"
      - "K8S_Node2"

  The task also reads aws_access_key, which is not listed here and has to be defined as well.

- In the playbook setup.yml:

    - hosts: localhost
      gather_facts: False
      #vars_files: secret.yml
      roles:
        - name: "EC2 Launch"
          role: /root/task23/k8s/ec2/

- Run the playbook:

    ansible-playbook setup.yml

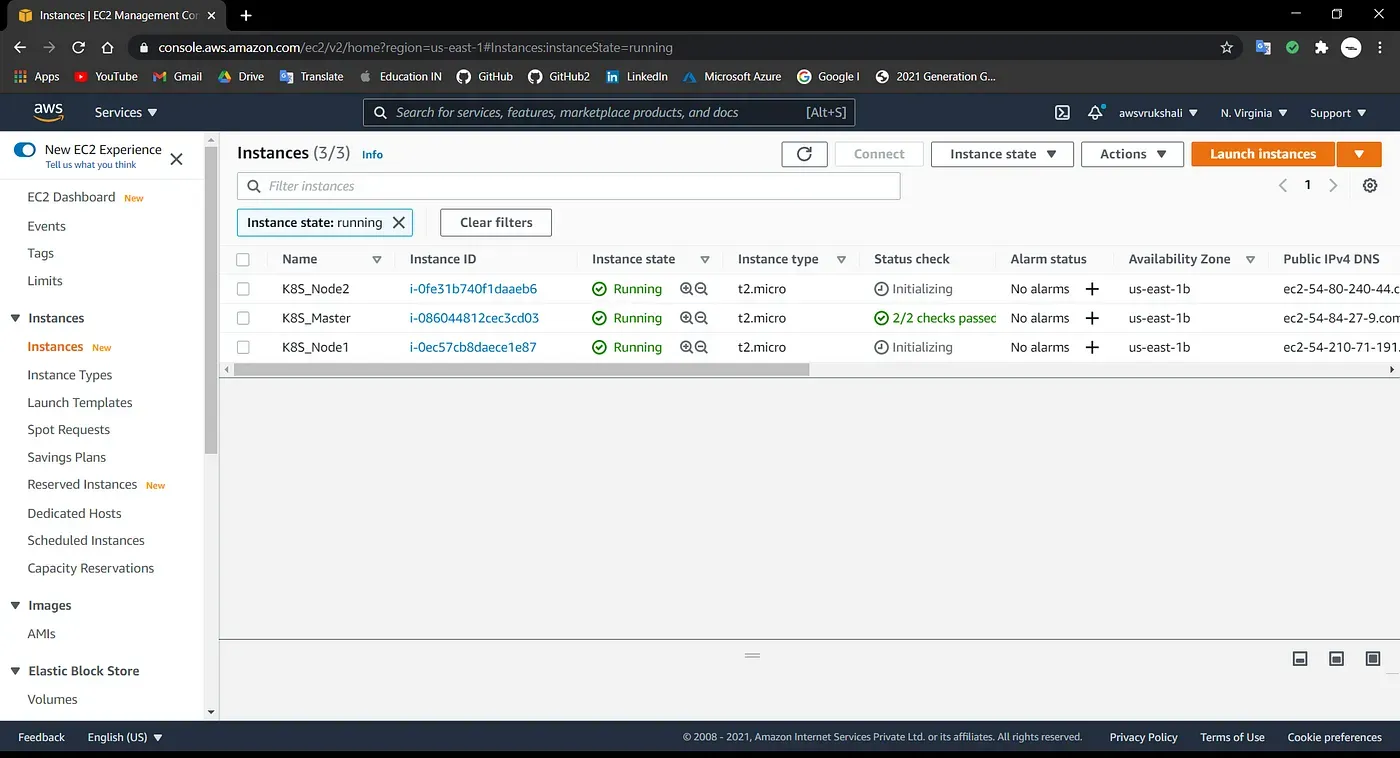

- The instances are launched and the hosts file has been updated.
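The post does not show how its dynamic inventory is configured. One way to obtain groups named like `tag_Name_K8S_Master` is the `amazon.aws.aws_ec2` inventory plugin; the sketch below assumes that plugin (and its collection) is installed, that AWS credentials are available to it, and that hosts should be addressed by public IP. The original setup may well have used something else.

```yaml
# aws_ec2.yml (the file name must end in aws_ec2.yml or aws_ec2.yaml)
plugin: amazon.aws.aws_ec2
regions:
  - us-east-1
filters:
  instance-state-name: running   # ignore stopped or terminated instances
keyed_groups:
  # Builds one group per tag key/value, e.g. tag_Name_K8S_Master
  - key: tags
    prefix: tag
hostnames:
  - ip-address                   # use the public IP as the host name
```

Running `ansible-inventory -i aws_ec2.yml --graph` lists the generated groups, which is a quick way to check that the tag-based group names match those used in the playbooks.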

Setting Up Master Node and Worker Nodes

On the master node:

- Create the role:

    ansible-galaxy init k8s_master

- In the tasks file:

    - name: "Creating Repo for Kubernetes"
      copy:
        src: kubernetes.repo
        dest: /etc/yum.repos.d/kubernetes.repo

    - name: "Installing Software"
      package:
        name: "{{ item }}"
        state: present
      loop: "{{ package_name }}"

    - name: "Starting services"
      service:
        name: "{{ item }}"
        state: started
      loop: "{{ package }}"

    - name: "Changing driver to systemd"
      copy:
        src: daemon.json
        dest: /etc/docker/daemon.json

    - name: "Restart Docker Services"
      service:
        name: docker
        state: restarted

    - name: "Pulling Images"
      shell: kubeadm config images pull

    - name: "Bridge to 1"
      shell: echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

    - name: "kubeadm init"
      shell: kubeadm init --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=NumCPU --ignore-preflight-errors=Mem
      ignore_errors: yes

    - name: "Creating .kube directory"
      file:
        path: $HOME/.kube
        state: directory

    - name: "Copying file"
      shell: cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

    - name: "Changing Owner Permissions"
      shell: chown $(id -u):$(id -g) $HOME/.kube/config

    - name: "Setting up Flannel"
      shell: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
      changed_when: False

    - name: "Token Creation"
      shell: kubeadm token create --print-join-command
      register: token

    - name: "Printing Token"
      debug:
        var: token.stdout

- In the vars file:

    package_name:
      - "docker"
      - "kubelet"
      - "kubeadm"
      - "kubectl"
      - "iproute-tc"
    package:
      - "docker"
      - "kubelet"

On the worker nodes:

- Create the role:

    ansible-galaxy init k8s_nodes

- In the tasks file:

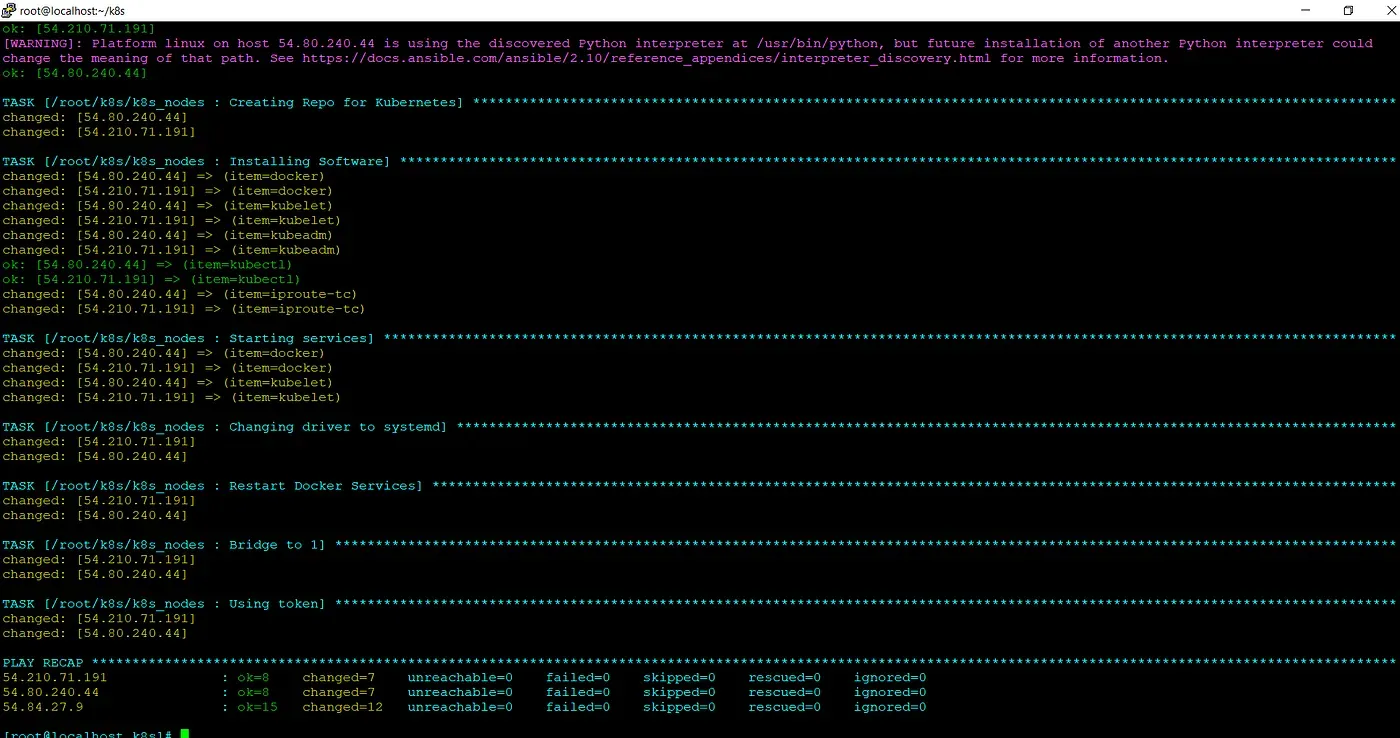

    - name: "Creating Repo for Kubernetes"
      copy:
        src: kubernetes.repo
        dest: /etc/yum.repos.d/kubernetes.repo

    - name: "Installing Software"
      package:
        name: "{{ item }}"
        state: present
      loop: "{{ package_name }}"

    - name: "Starting services"
      service:
        name: "{{ item }}"
        state: started
      loop: "{{ package }}"

    - name: "Changing driver to systemd"
      copy:
        src: daemon.json
        dest: /etc/docker/daemon.json

    - name: "Restart Docker Services"
      service:
        name: docker
        state: restarted

    - name: "Bridge to 1"
      shell: echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables

    - name: "Using token"
      shell: "{{ token }}"

- In the vars file:

    package_name:
      - "docker"
      - "kubelet"
      - "kubeadm"
      - "kubectl"
      - "iproute-tc"
    package:
      - "docker"
      - "kubelet"

- In the playbook k8s_setup.yml:

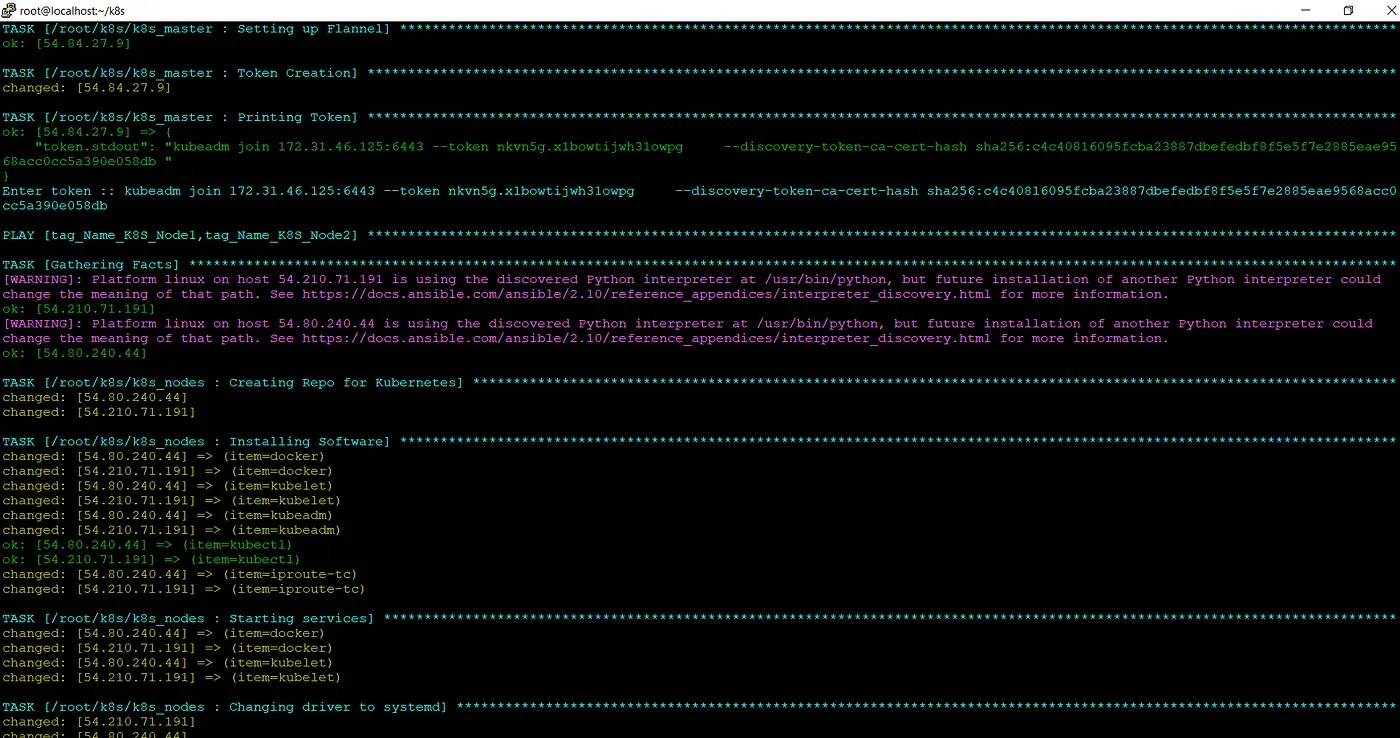

    - hosts: "tag_Name_K8S_Master"
      roles:
        - name: "K8S Master"
          role: /root/k8s/k8s_master

    - hosts: ["tag_Name_K8S_Node1", "tag_Name_K8S_Node2"]
      vars_prompt:
        - name: token
          prompt: "Enter token :"
          private: no
      roles:
        - name: "K8S_Nodes"
          role: /root/k8s/k8s_nodes

- Run the playbook:

    ansible-playbook k8s_setup.yml
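The playbook prompts for the join command, which means copying it by hand from the master's output. A possible alternative is to read the value the master role registered as `token` through `hostvars`. This is a sketch under stated assumptions: both plays run in the same `ansible-playbook` invocation, the `tag_Name_K8S_Master` group contains exactly one host, and the master role registers `token` as shown earlier.

```yaml
# Variant of k8s_setup.yml without vars_prompt (a sketch, not the author's method)
- hosts: "tag_Name_K8S_Master"
  roles:
    - name: "K8S Master"
      role: /root/k8s/k8s_master

- hosts: ["tag_Name_K8S_Node1", "tag_Name_K8S_Node2"]
  vars:
    # Join command captured on the master by the "Token Creation" task
    token: "{{ hostvars[groups['tag_Name_K8S_Master'][0]]['token']['stdout'] }}"
  roles:
    - name: "K8S_Nodes"
      role: /root/k8s/k8s_nodes
```

The worker role stays unchanged, since it still runs whatever the `token` variable contains.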

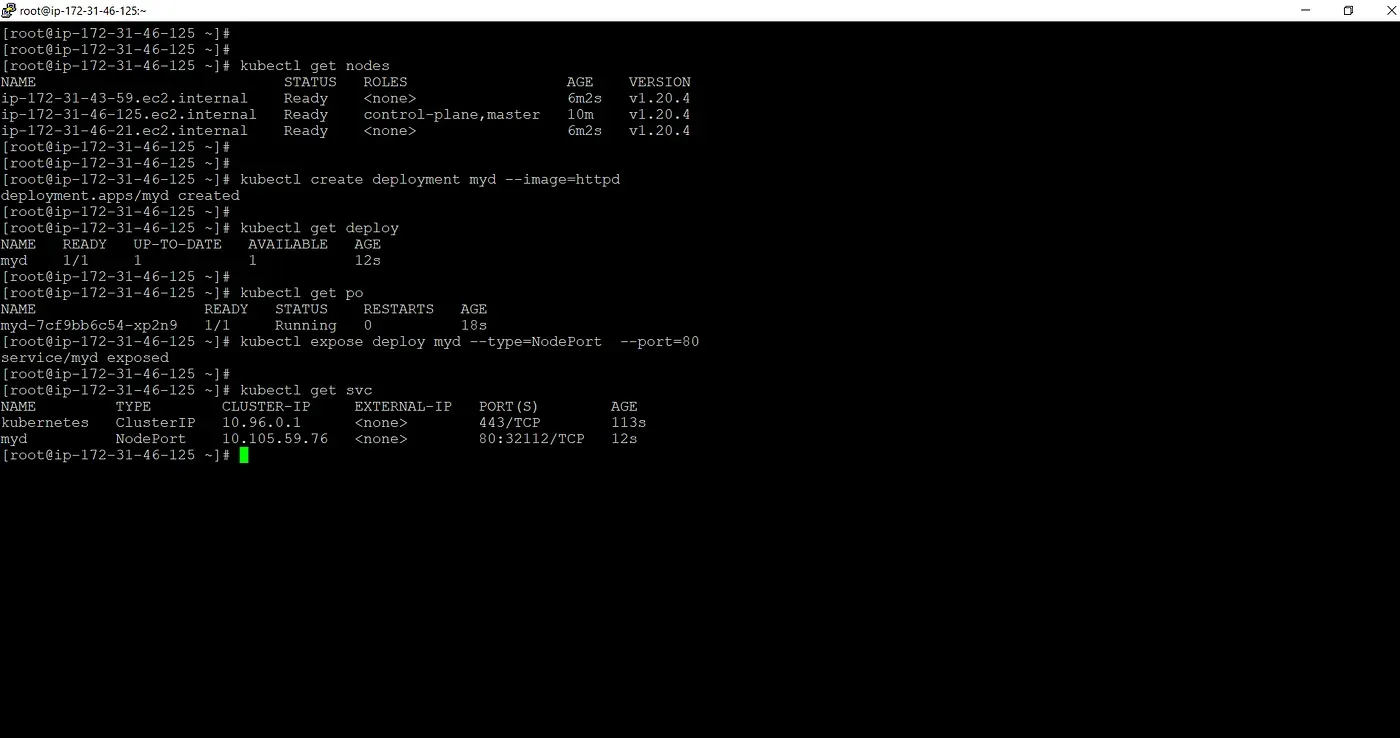
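Re-running the playbook repeats `kubeadm init` on a master that is already initialised, which is presumably why the role sets `ignore_errors: yes`. The sketch below shows how a few of the shell tasks could be made idempotent instead. It assumes the `ansible.posix` collection is installed, that the `br_netfilter` kernel module is loaded, and that fact gathering is enabled so that `ansible_env` is available.

```yaml
- name: "Enable iptables processing of bridged traffic"
  ansible.posix.sysctl:
    name: net.bridge.bridge-nf-call-iptables
    value: "1"
    state: present

- name: "kubeadm init (skipped once admin.conf exists)"
  command: >-
    kubeadm init --pod-network-cidr=10.244.0.0/16
    --ignore-preflight-errors=NumCPU --ignore-preflight-errors=Mem
  args:
    creates: /etc/kubernetes/admin.conf

- name: "Copy admin kubeconfig for the connecting user"
  copy:
    src: /etc/kubernetes/admin.conf
    dest: "{{ ansible_env.HOME }}/.kube/config"
    remote_src: yes          # the source file is on the managed node
    mode: "0600"
```

With `creates`, Ansible reports the init task as skipped on later runs rather than failing and being ignored.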

- On the master node (an AWS instance), all the nodes are ready and we can deploy pods:

    kubectl get nodes

- Finally, in the browser:

    http://<master_ip>:<port_no>
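The post does not say which workload is behind that URL. Reaching an application on a node's IP and port usually means a NodePort Service, sketched below. The Service name, the `app: web` selector, and port 30080 are hypothetical. The example assumes pods carrying that label already exist and that the instance's security group allows inbound traffic on the chosen port.

```yaml
# Hypothetical NodePort Service exposing pods labelled app=web
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: NodePort
  selector:
    app: web
  ports:
    - port: 80          # port of the Service inside the cluster
      targetPort: 80    # container port the traffic is forwarded to
      nodePort: 30080   # port opened on every node (default range 30000-32767)
```

With this manifest, `<port_no>` in the URL above would be 30080.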

...
